Failed Experiment: When Google’s Lightweight MMM Turned My Ads into Zombies

Prologue: The Experiment Nobody Warned Me About

Let’s rewind. I’m a data scientist who has spent more years than I’d like to admit tinkering with Marketing Mix Modelling (MMM). I’ve worked with big media agencies, publishers, and brands, and I’ve seen the good, the bad, and the “how the hell did that pass as statistics” of marketing analytics.

So when Google released Lightweight MMM — an open-source Python package promising a faster, easier, Bayesian way to measure media effectiveness — I was hooked. Free, powerful, and with Google’s shiny logo attached? Sign me up.

But like most free experiments in life (free tattoos, free food samples from a sketchy street vendor, free trial SaaS tools that need three days of debugging before running), what looks good upfront often leaves scars.

What started as excitement quickly spiralled into one of the strangest episodes of my MMM career. And it all revolved around one thing: zombie ads.

Falling in Love with Lightweight MMM

If Meta’s Robyn was a messy love affair (remember the AWS bills and evolutionary computing nightmare?), Google’s Lightweight MMM felt different. It was cleaner.

Installation was a breeze:

```bash
pip install lightweight_mmm
```

No need to spin up half of AWS just to get a model to converge. No evolutionary computing black hole. Just Python, some JAX magic, and Bayesian priors. Honestly, the first time I ran it, I thought:

“This might be it. This might actually save me from the endless cycle of agency black-box MMM models and overpriced consultants.”

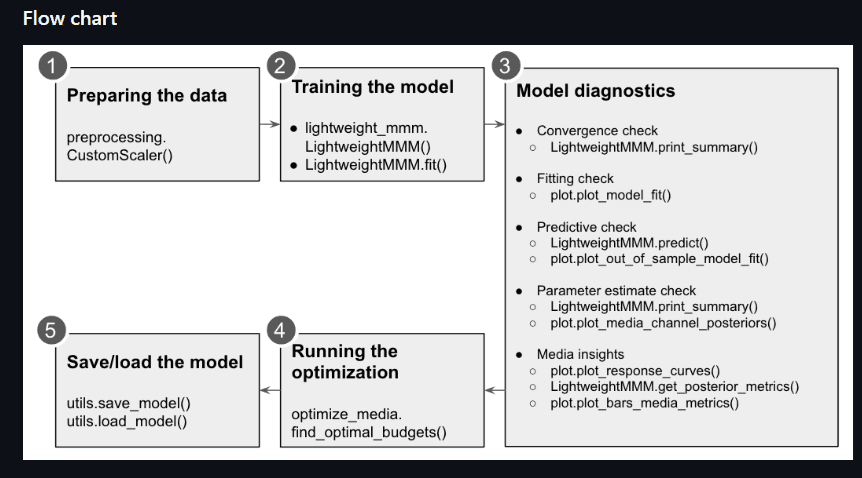

The package had everything a data scientist dreams of:

- Bayesian regression models baked in

- Adstock transformations for media lag effects

- Diminishing returns functions to model saturation

- Forecasting tools to predict scenarios

- And, of course, the warm glow of Google’s name

It looked like an MMM playground where data people like me could build elegant, transparent, trustworthy models.

But like any playground, it came with rusty swings and broken slides.

Enter the Zombies (a.k.a. Adstock Gone Wrong)

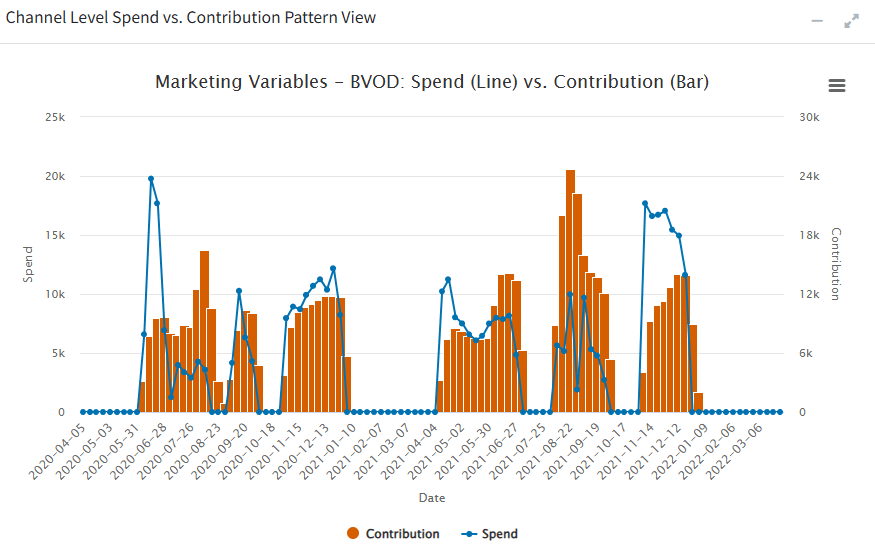

The real trouble started when I fed in my first media dataset: a mix of digital, TV, and other media spend covering a couple of years. I applied the default geometric adstock transformation. Everything looked fine.
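For readers new to adstock: the geometric version carries a fixed share of last period's media pressure into the next one, so an effect decays but never quite reaches zero. Below is a minimal pure-Python sketch. It assumes weekly data and the simple unnormalised recurrence `adstock[t] = spend[t] + decay * adstock[t-1]`. Lightweight MMM's own implementation may differ in details such as normalisation, and the radio spend series is made up for illustration.

```python
def geometric_adstock(spend, decay):
    """Carry a share `decay` of last week's adstock into this week (unnormalised)."""
    if not 0.0 <= decay < 1.0:
        raise ValueError("decay must be in [0, 1)")
    adstocked = []
    carry = 0.0
    for x in spend:
        carry = x + decay * carry
        adstocked.append(carry)
    return adstocked


# Hypothetical radio spend: 10 weeks on air, then 40 weeks dark.
radio = [100.0] * 10 + [0.0] * 40

for decay in (0.5, 0.95):
    a = geometric_adstock(radio, decay)
    # Compare the last on-air week (index 9) with the final dark week (index 49).
    print(f"decay={decay}: last on-air week {a[9]:.1f}, 40 weeks later {a[49]:.2f}")
```

With `decay=0.5` the signal fades within a few weeks of the last spot. With `decay=0.95` the adstocked value 40 weeks after the campaign ends is still about 103, more than a full week of live spend. A model will happily attribute sales to that tail.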

Until the results came back.

One media channel — let’s call it “Radio” to protect the innocent — showed ROI numbers that defied capitalism, physics, and human intelligence.

According to Lightweight MMM, every dollar I put into radio advertising was delivering so much incremental revenue that I should have mortgaged my house and bought out every radio station in the country.

But here’s the kicker: even after I stopped spending on radio in the dataset, the model insisted radio was still delivering revenue.

Weeks later. Months later. Like a zombie crawling out of the grave, still dragging incremental sales behind it.

This wasn’t advertising anymore. This was necromancy.
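The arithmetic behind a zombie tail is short. Assume the geometric form $a_t = x_t + \lambda a_{t-1}$ with decay rate $0 \le \lambda < 1$ and weekly data. Once spend stops after week $T$, every later week simply multiplies the remaining adstock by $\lambda$:

$$
a_{T+k} = \lambda^{k} a_T, \qquad k_{1/2} = \frac{\ln(1/2)}{\ln \lambda}
$$

Here $k_{1/2}$ is the half-life in weeks. For $\lambda = 0.9$ the half-life is about 6.6 weeks. For $\lambda = 0.97$ it is about 22.8 weeks, and for $\lambda = 0.99$ it is about 69 weeks. A decay rate that looks only slightly higher on a posterior plot can turn a half-life of about a month and a half into one of well over a year.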

The Beauty and the Horror of Bayesian Stats

Now, to be clear: Lightweight MMM wasn’t “wrong” in a statistical sense. The model was doing exactly what it was told: applying adstock decay, combining the priors with the data, and sampling from the posterior distributions that resulted.

The problem? The defaults were too generous.

- The adstock parameter wasn’t constrained tightly enough, so the decay rate could settle close to 1 and let media effects linger… forever

- Diminishing returns weren’t kicking in early enough, so spending looked like it had infinite upside

- Priors were set in a way that gave too much credibility to weak signals
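Saturation is usually modelled with a concave or S-shaped curve. Lightweight MMM's `hill_adstock` model uses a Hill-type function. The sketch below is a standalone re-implementation of the standard Hill form, not the package's code, and the parameter values are hypothetical. It shows how the half-saturation point `half_max` decides whether diminishing returns ever appear within the range of spend you actually observed.

```python
def hill_saturation(spend, half_max, slope):
    """Standard Hill curve scaled to [0, 1): equals 0.5 when spend == half_max."""
    if spend <= 0:
        return 0.0
    return 1.0 / (1.0 + (spend / half_max) ** (-slope))


def doubling_lift(spend, half_max, slope=1.0):
    """Ratio of response at 2x spend to response at 1x spend (2.0 means no saturation)."""
    return hill_saturation(2 * spend, half_max, slope) / hill_saturation(spend, half_max, slope)


# Hypothetical weekly radio spend of 100 units.
print(doubling_lift(100, half_max=150))        # saturation visible in the data range
print(doubling_lift(100, half_max=1_000_000))  # effectively linear: doubling spend ~doubles response
```

With `half_max=150`, doubling spend lifts the response by roughly 43%. With a half-saturation point far beyond any observed spend, doubling spend roughly doubles the response, which is exactly the "infinite upside" problem.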

In Bayesian language, it was elegant. In commercial language, it was insane.

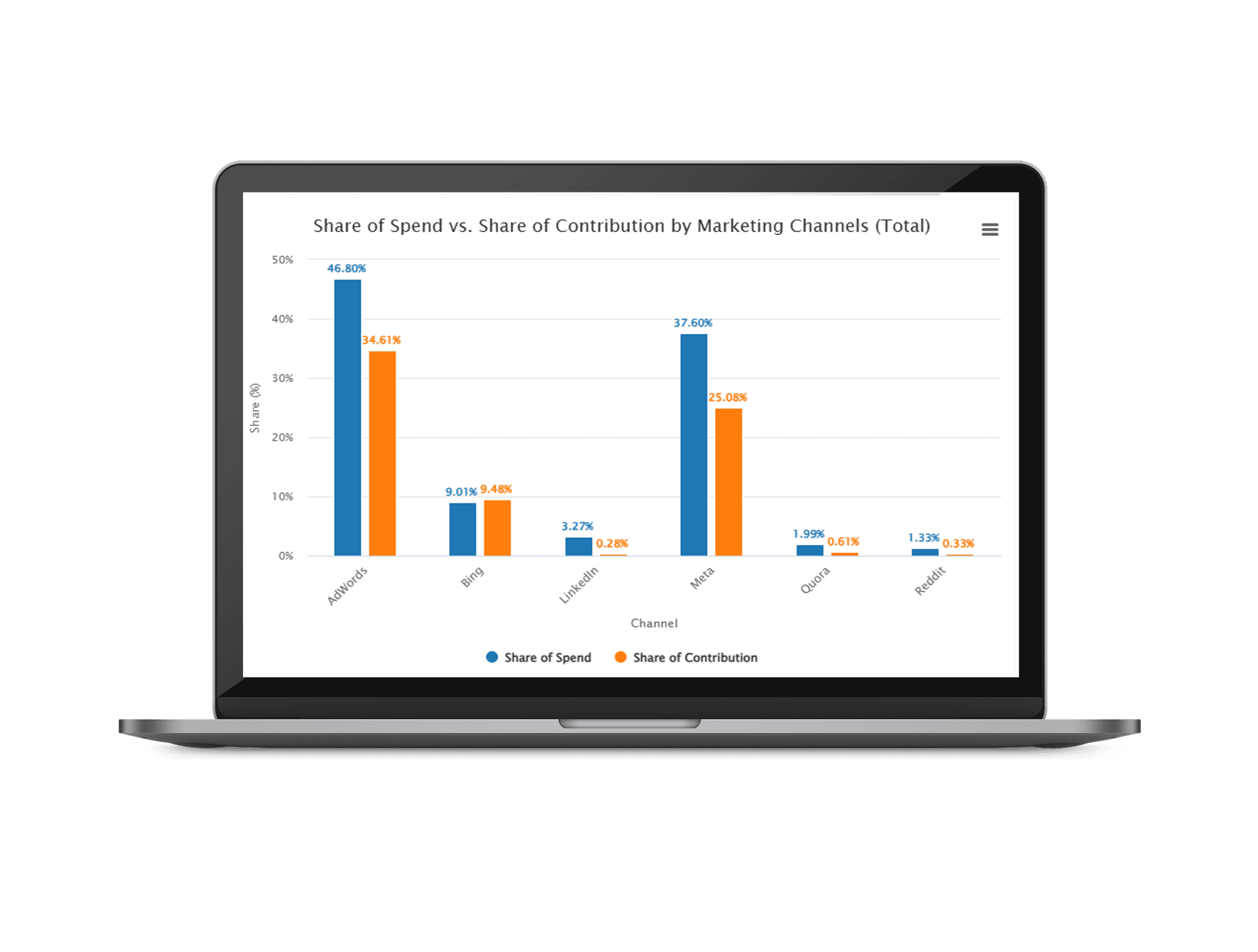

This is where marketers and data scientists live in different universes. A data scientist sees pretty posterior plots. A marketer sees a recommendation to double their spend on a channel that hasn’t run an ad in three months.

And if you deliver that to a CMO? You’re not getting invited back.

We’ve developed an updated Bayesian regression approach that uses constraints to regulate coefficient sampling, reducing the heavy reliance on prior definitions and assumptions. Instead of depending solely on priors, we incorporate the expected impact share for each variable directly into the likelihood function, alongside the priors, data, and constraints. If you’re interested, you can learn more in our latest blog post: Upgrading Google Meridian: A Constrained Bayesian Approach for Robust Marketing Mix Modelling.
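The paragraph above doesn't spell out how expected impact shares enter the likelihood. What follows is therefore only a hypothetical illustration of the general idea, not the method behind the linked Meridian upgrade. It scores a set of channel coefficients in four parts:

- a Gaussian log-likelihood of the observed sales
- a weak normal log-prior on each coefficient
- a hard non-negativity constraint that rejects invalid coefficients outright
- a soft Gaussian term that pulls each channel's share of modelled contribution toward an assumed expected share

All names (`log_target`, `expected_share`, `share_sd`, `prior_sd`) and the data are made up for this sketch.

```python
import math


def log_target(coefs, media, sales, sigma, expected_share, share_sd, prior_sd=10.0):
    """Hypothetical unnormalised log-posterior with constraints and an impact-share term.

    coefs: one coefficient per channel; media: one spend series per channel.
    """
    # Hard constraint: a media channel cannot destroy sales.
    if any(b < 0 for b in coefs):
        return -math.inf

    # Channel contributions and predicted sales (no intercept, to keep it short).
    contrib = [[b * x for x in series] for b, series in zip(coefs, media)]
    pred = [sum(week) for week in zip(*contrib)]

    # Gaussian log-likelihood of the observed sales.
    n = len(sales)
    sse = sum((y - p) ** 2 for y, p in zip(sales, pred))
    score = -0.5 * n * math.log(2 * math.pi * sigma**2) - sse / (2 * sigma**2)

    # Weak zero-centred normal log-prior on each coefficient (up to a constant).
    score -= sum(0.5 * (b / prior_sd) ** 2 for b in coefs)

    # Soft expected-share term: penalise channels whose share of total
    # modelled contribution drifts away from the expected share.
    totals = [sum(c) for c in contrib]
    grand = sum(totals)
    if grand <= 0:
        return -math.inf
    for total, target in zip(totals, expected_share):
        score -= 0.5 * ((total / grand - target) / share_sd) ** 2
    return score


media = [[1.0, 2.0, 3.0, 4.0], [2.0, 2.0, 2.0, 2.0]]  # made-up spend for two channels
sales = [3.0, 4.0, 5.0, 6.0]
print(log_target([1.0, 1.0], media, sales, 0.5, [0.55, 0.45], 0.05))
print(log_target([1.5, 0.25], media, sales, 0.5, [0.55, 0.45], 0.05))
```

In a real model this log-target would be handed to an MCMC sampler rather than evaluated by hand. The point is only that the expected shares act alongside the priors, the data, and the constraints rather than replacing them.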

The Zombie Apocalypse in the Boardroom

I’ll never forget the first time I presented one of these Lightweight MMM results to a marketing team.

I walked them through the model structure. I showed them the tidy Bayesian intervals. I presented the channel-level ROI.

The client stared at the slide. Then someone said:

“Wait. Are you telling me radio is still selling our product, even though we haven’t run radio since last year?”

Silence.

I scrambled. “Well, technically, what the model suggests is that there are lingering effects from brand equity…”

They cut me off.

“So basically… you’re saying our dead radio ads are zombies?”

I laughed nervously. They didn’t.

That was the moment I realized: what looks like statistical beauty to us is commercial nonsense to them.

Why This Matters (and Why It’s Not Google’s Fault)

Here’s the truth: I don’t blame Google. Lightweight MMM is a gift to the data science community. It’s transparent, free, and pushes MMM out of the agency black box.

But it’s not built for marketers.

It’s built for data scientists who:

- Understand how to set priors

- Know when and where to constrain adstock parameters

- Can interpret credible intervals instead of taking point estimates as gospel

- Have the patience to debug why ROI looks like it was written by a drunk economist
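Reading a credible interval instead of a point estimate is easy to show. The sketch below assumes you already have posterior draws of a channel's ROI. Here they are simulated from a made-up lognormal distribution, not taken from real model output. It computes a 95% equal-tailed credible interval with a simple nearest-rank rule.

```python
import random
import statistics


def equal_tailed_interval(draws, mass=0.95):
    """Equal-tailed credible interval from posterior draws (nearest-rank quantiles)."""
    s = sorted(draws)
    n = len(s)
    tail = (1.0 - mass) / 2.0
    lower = s[round(tail * (n - 1))]
    upper = s[round((1.0 - tail) * (n - 1))]
    return lower, upper


random.seed(0)
# Hypothetical posterior draws of radio ROI (revenue per unit of spend).
roi_draws = [random.lognormvariate(0.0, 0.8) for _ in range(4000)]

point = statistics.median(roi_draws)
low, high = equal_tailed_interval(roi_draws)
print(f"median ROI {point:.2f}, 95% interval [{low:.2f}, {high:.2f}]")
```

With these made-up draws, the median sits near 1.0 while the interval runs from roughly 0.2 to roughly 4.8. The same posterior supports both "radio loses money" and "radio is a goldmine", and quoting only the point estimate hides that.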

Marketers? They want clean answers. They want ROI that matches reality. They don’t care if your posterior has a 95% credible interval. They care if they can trust the numbers enough to take them to their CFO without getting laughed out of the room.

And trust me, no CFO believes in zombie ads.

Lessons from the Graveyard

Here’s what I learned (the hard way) from my Lightweight MMM experiment:

- Adstock needs guardrails: never let adstock decay rates run free. If you don’t constrain them, you’ll end up with immortal ads.

- Priors are everything: Lightweight MMM makes Bayesian modelling easy, but the defaults are too weak for commercial use. Tailor your priors or prepare for weirdness.

- Marketers need auditability, not just plots: when results don’t match reality, you need a framework to debug and explain, not just statistical outputs.

- Open source ≠ ready for the boardroom: data scientists can love Lightweight MMM, but hand it raw to a marketer and you’ll burn trust faster than a dodgy influencer campaign.
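One concrete way to put guardrails on adstock is to check what your decay-rate prior believes before any data arrives. The sketch below assumes the geometric form, weekly data, and two hypothetical Beta priors on the decay rate. These are illustrative choices, so check the package source for its actual defaults. The code computes the prior probability that an ad's half-life exceeds 26 weeks, using the exact Beta CDF for integer parameters.

```python
import math


def beta_cdf_int(x, a, b):
    """Exact CDF of Beta(a, b) at x for positive integers a, b (binomial identity)."""
    n = a + b - 1
    return sum(math.comb(n, j) * x**j * (1 - x) ** (n - j) for j in range(a, n + 1))


def prob_half_life_exceeds(weeks, a, b):
    """Prior probability that the geometric-adstock half-life is longer than `weeks`."""
    # Half-life > weeks  <=>  decay > 0.5 ** (1 / weeks)
    threshold = 0.5 ** (1.0 / weeks)
    return 1.0 - beta_cdf_int(threshold, a, b)


for a, b in [(2, 1), (3, 3)]:
    p = prob_half_life_exceeds(26, a, b)
    print(f"Beta({a}, {b}) prior: P(half-life > 26 weeks) = {p:.4f}")
```

Beta(2, 1) leaves about a 5% prior chance of a half-life longer than six months, while Beta(3, 3) puts well under 0.1% there. Which prior is right depends on the channel and the data frequency. The point is to look before you fit.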

Epilogue: From Zombies to Trustworthy MMM

So where does that leave us?

Lightweight MMM is a powerful tool, but like my Meta Robyn adventure, this experiment proved one thing: open-source MMM is too raw for most marketers. It’s brilliant for tinkering, for learning, for research. But for actual commercial decision-making? It’s a minefield.

That’s why I built More Than Data.

- No zombies. Our MMM models have guardrails so ads don’t live forever.

- No black boxes. We make the stats transparent but the outputs business-ready.

- No betrayal in the boardroom. What we deliver is audit-ready, CFO-proof, and marketer-friendly.

Because at the end of the day, marketers don’t care about pretty Bayesian plots. They care about trust.

And trust, my friends, is something zombie ads can never give you.

Final Thoughts (and a Sequel Teaser)

If you enjoyed this story, you’ll love my other failed experiment with Meta’s Robyn, where I discovered the joy of evolutionary computing eating AWS bills alive. Spoiler: it didn’t end well either.

Open-source MMM is like free street food: sometimes delicious, sometimes food poisoning. Either way, you’ll have a story.

The question is: do you want a story, or do you want results you can actually take to your CFO?

At More Than Data, we choose results.